KubeEdge

Deploying KubeEdge

Introduction

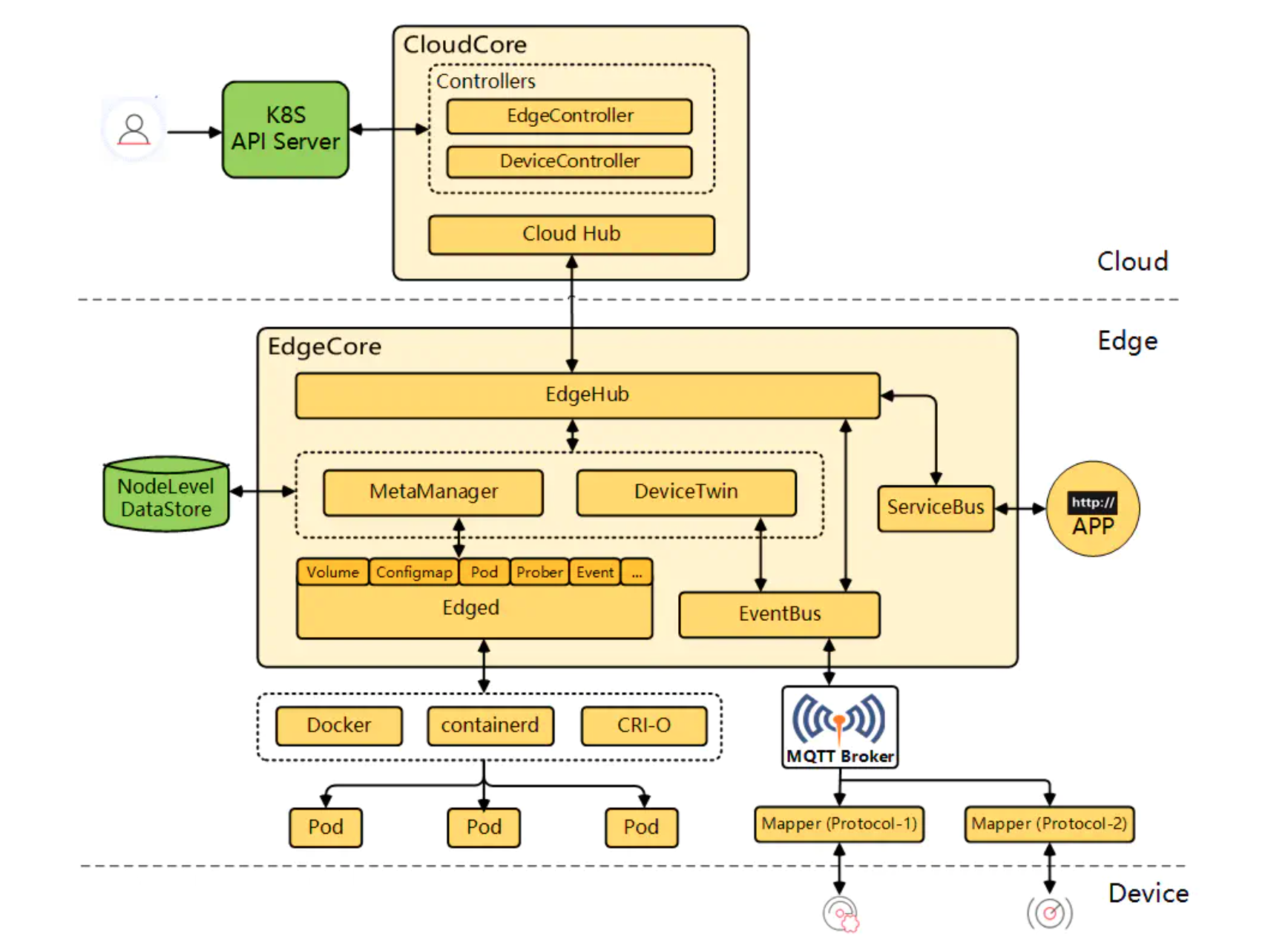

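KubeEdge is an open-source system for edge computing scenarios. It builds on the native container orchestration and scheduling capabilities of Kubernetes and adds cloud-edge collaboration, offloading of computation to the edge, management of large numbers of edge devices, and edge autonomy.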

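The architecture is shown in the diagram above.

The CloudHub component (part of cloudcore) solves the problem that the cloud and edge sides cannot otherwise reach each other in both directions. Edge nodes connect to CloudHub, and CloudHub maintains those connections and relays messages over them.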

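Deploying the KubeEdge components

Enabling KubeEdge through KubeSphere

Edit the ks-installer ClusterConfiguration (for example with kubectl -n kubesphere-system edit clusterconfiguration ks-installer) and set the following under spec: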

```yaml
edgeruntime:
  enabled: true
  kubeedge:
    cloudCore:
      cloudHub:
        advertiseAddress:
          - '192.168.10.6' # cloudHub address, must be reachable from the edge nodes
      service:
        cloudhubHttpsNodePort: '30002'
        cloudhubNodePort: '30000'
        cloudhubQuicNodePort: '30001'
        cloudstreamNodePort: '30003'
        tunnelNodePort: '30004'
    enabled: true
    iptables-manager:
      enabled: true
      mode: external
```
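After ks-installer has applied the change, you can confirm that the cloudcore NodePorts listed above are reachable from an edge node with a plain TCP probe. The sketch below assumes bash, because it uses bash's /dev/tcp redirection, and coreutils timeout. The helper name check_ports is hypothetical. cloudhubQuicNodePort (30001) carries QUIC, which runs over UDP, so a TCP probe does not apply to it.

```shell
# check_ports HOST PORT...  (hypothetical helper)
# Tries a TCP connection to each PORT on HOST through bash's /dev/tcp
# redirection, allowing 3 seconds per port. Prints one line per port and
# returns non-zero if any port could not be reached.
check_ports() {
  local host="$1"
  shift
  local port rc=0
  for port in "$@"; do
    if timeout 3 bash -c ">/dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "${host}:${port} reachable"
    else
      echo "${host}:${port} unreachable"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, run check_ports 192.168.10.6 30000 30002 30003 30004 on the edge node. Any "unreachable" line points to a firewall, routing, or port-forwarding problem, not to an edgecore configuration issue.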

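If the advertiseAddress cannot conveniently be exposed directly, add that address to a local interface as an alias (here on eth0), then expose the cloudcore Service ports through frp:

```shell
ifconfig eth0:1 192.168.10.6 netmask 255.255.255.0 up
```

This setting is lost after a reboot, so the command must be run automatically at boot.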
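One way to re-create the address at boot is a small systemd oneshot unit. This is an assumption, not part of the original setup. The unit uses ip addr add ... label eth0:1, the iproute2 equivalent of the ifconfig alias above. The helper name write_alias_unit and the unit name kubeedge-alias.service are hypothetical.

```shell
# write_alias_unit UNIT_PATH IFACE CIDR  (hypothetical helper)
# Writes a systemd oneshot unit that adds CIDR to IFACE with the label
# IFACE:1 at boot. The leading "-" in ExecStart tells systemd to ignore a
# non-zero exit status, e.g. when the address is already present.
write_alias_unit() {
  local unit_path="$1" iface="$2" cidr="$3"
  cat > "$unit_path" <<EOF
[Unit]
Description=Add ${cidr} to ${iface} for the KubeEdge cloudHub address
After=network-online.target
Wants=network-online.target

[Service]
Type=oneshot
ExecStart=-/sbin/ip addr add ${cidr} dev ${iface} label ${iface}:1
RemainAfterExit=yes

[Install]
WantedBy=multi-user.target
EOF
}
```

To use it:
1. As root, write the unit with write_alias_unit /etc/systemd/system/kubeedge-alias.service eth0 192.168.10.6/24.
2. Run systemctl daemon-reload.
3. Run systemctl enable --now kubeedge-alias.service.

RemainAfterExit=yes keeps the unit reported as active after the one-shot command finishes.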

Adding an edge node

Preparing the edge node (Ubuntu)

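```shell
# Install the Docker runtime. Docker is preferred: the official documentation states
# that EdgeMesh only supports Docker, and Docker v19.03.0 or later is required
sudo apt install docker.io
sudo mkdir -p /etc/docker
cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
# Restart Docker so that daemon.json takes effect
sudo systemctl restart docker

# Enable IPv4 forwarding. Append the setting only if it is missing, and write it
# through "sudo tee -a": with "sudo echo ... >>", the redirection runs without root
grep -q '^net.ipv4.ip_forward *= *1' /etc/sysctl.conf || echo "net.ipv4.ip_forward = 1" | sudo tee -a /etc/sysctl.conf
sudo sysctl -p
echo "check result"
sudo sysctl -p | grep ip_forward
```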
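Because Docker v19.03.0 or later is required, it is worth checking the installed version before you continue. This is a minimal sketch that assumes GNU sort with -V (version sort) is available, as it is on Ubuntu. version_ge is a hypothetical helper name.

```shell
# version_ge A B  (hypothetical helper)
# Returns 0 if version A >= version B. sort -V compares version strings
# component by component, so the smaller of the two sorts first; A >= B
# exactly when B comes out first.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example: version_ge "$(docker version --format '{{.Server.Version}}')" 19.03.0 || echo "Docker is too old".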

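Get the command for adding the node from the KubeSphere console.

Run the edge node initialization command on the node. The output looks similar to this: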

```
MQTT is installed in this host
kubeedge-v1.9.2-linux-amd64.tar.gz checksum:
checksum_kubeedge-v1.9.2-linux-amd64.tar.gz.txt content:
[Run as service] start to download service file for edgecore
[Run as service] success to download service file for edgecore
kubeedge-v1.9.2-linux-amd64/
kubeedge-v1.9.2-linux-amd64/edge/
kubeedge-v1.9.2-linux-amd64/edge/edgecore
kubeedge-v1.9.2-linux-amd64/version
kubeedge-v1.9.2-linux-amd64/cloud/
kubeedge-v1.9.2-linux-amd64/cloud/csidriver/
kubeedge-v1.9.2-linux-amd64/cloud/csidriver/csidriver
kubeedge-v1.9.2-linux-amd64/cloud/admission/
kubeedge-v1.9.2-linux-amd64/cloud/admission/admission
kubeedge-v1.9.2-linux-amd64/cloud/cloudcore/
kubeedge-v1.9.2-linux-amd64/cloud/cloudcore/cloudcore
kubeedge-v1.9.2-linux-amd64/cloud/iptablesmanager/
kubeedge-v1.9.2-linux-amd64/cloud/iptablesmanager/iptablesmanager
KubeEdge edgecore is running, For logs visit: journalctl -u edgecore.service -b
```

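Output like the above means initialization has finished, but not necessarily successfully. The configuration file usually still needs to be adjusted.

The KubeEdge configuration file is /etc/kubeedge/config/edgecore.yaml. Set edged's cgroupDriver to match Docker's (systemd in the daemon.json above), then restart edgecore: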

```yaml
edged:
  cgroupDriver: systemd
  ...
```
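edgecore fails to start when this value differs from Docker's cgroup driver, so a quick consistency check can save a round trip through the logs. This is a minimal sketch. It assumes the key appears as cgroupDriver: in edgecore.yaml and that Docker's driver is passed in as an argument, for example from docker info --format '{{.CgroupDriver}}'. Both helper names are hypothetical.

```shell
# edgecore_cgroup_driver FILE  (hypothetical helper)
# Prints the first "cgroupDriver:" value found in an edgecore.yaml,
# with any double quotes removed.
edgecore_cgroup_driver() {
  awk '/^[[:space:]]*cgroupDriver:/ { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# check_cgroup_match DOCKER_DRIVER FILE  (hypothetical helper)
# Compares Docker's cgroup driver with the one configured in edgecore.yaml.
# Prints the result and returns non-zero on a mismatch.
check_cgroup_match() {
  local docker_driver="$1" edge_driver
  edge_driver="$(edgecore_cgroup_driver "$2")"
  if [ "$docker_driver" = "$edge_driver" ]; then
    echo "match: ${docker_driver}"
  else
    echo "mismatch: docker=${docker_driver} edgecore=${edge_driver:-unset}"
    return 1
  fi
}
```

For example: check_cgroup_match "$(docker info --format '{{.CgroupDriver}}')" /etc/kubeedge/config/edgecore.yaml.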

Deploy nginx as a test:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx
          name: nginx
          ports:
            - containerPort: 80
              protocol: TCP
              hostPort: 8089
      tolerations:
        - key: "node-role.kubernetes.io/edge"
          operator: "Exists"
          effect: "NoSchedule"
```

Test on the node (you can replace the IP address with the node's address):

```shell
curl 127.0.0.1:8089
```
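The toleration in the Deployment only allows the Pod onto edge nodes. It does not require that placement, so the scheduler may still put the Pod on a cloud node. To pin the test to one edge node, you can add a nodeSelector on the well-known kubernetes.io/hostname label. The sketch below renders the same Deployment with that selector. It assumes the edge node carries that label (check with kubectl get node --show-labels). The helper name and the node name used below are hypothetical.

```shell
# render_nginx_deployment NODE_NAME HOST_PORT  (hypothetical helper)
# Prints the nginx test Deployment with a nodeSelector that pins the Pod to
# NODE_NAME, keeping the edge toleration and the hostPort mapping.
render_nginx_deployment() {
  local node="$1" host_port="$2"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      nodeSelector:
        kubernetes.io/hostname: ${node}
      containers:
        - image: nginx
          name: nginx
          ports:
            - containerPort: 80
              protocol: TCP
              hostPort: ${host_port}
      tolerations:
        - key: "node-role.kubernetes.io/edge"
          operator: "Exists"
          effect: "NoSchedule"
EOF
}
```

For example: render_nginx_deployment edge-node-1 8089 | kubectl apply -f - (edge-node-1 stands in for your edge node's name).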

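Configuring the iptables DaemonSet

After deployment, the iptables DaemonSet (cloud-iptables-manager) is not scheduled onto the k8s-master node. It needs a toleration for the master taint.

In the KubeSphere console, go to Application Workloads > Workloads > DaemonSets, edit cloud-iptables-manager, and add the following configuration: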

```yaml
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: cloud-iptables-manager
  namespace: kubeedge
spec:
  template:
    spec:
      ......
      # Add the following configuration
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
```

Note: without the change above, KubeSphere cannot show logs for Pods on edge nodes or run commands in them.
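You can also add the same toleration from the command line with kubectl patch and a JSON Patch. This sketch assumes the DaemonSet already has a tolerations list. A JSON Patch add to /spec/template/spec/tolerations/- appends to an existing array and fails if the array is missing. The helper toleration_patch is hypothetical. On kubeadm-based clusters running Kubernetes 1.24 or later, control-plane nodes are tainted with node-role.kubernetes.io/control-plane, either instead of or in addition to node-role.kubernetes.io/master.

```shell
# toleration_patch KEY EFFECT  (hypothetical helper)
# Prints a JSON Patch that appends one toleration (operator Exists) to a
# workload's Pod template.
toleration_patch() {
  local key="$1" effect="$2"
  printf '[{"op":"add","path":"/spec/template/spec/tolerations/-","value":{"key":"%s","operator":"Exists","effect":"%s"}}]\n' "$key" "$effect"
}
```

For example: kubectl -n kubeedge patch daemonset cloud-iptables-manager --type json -p "$(toleration_patch node-role.kubernetes.io/master NoSchedule)".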

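Enabling Metrics

At this point, CPU, memory, and other metrics from the edge nodes are not collected yet, so Metrics must be enabled.

First make sure metrics_server is enabled in KubeSphere (in the ks-installer ClusterConfiguration):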

```yaml
metrics_server:
  enabled: true
```

Edit the configuration on the edge node:

```shell
vi /etc/kubeedge/config/edgecore.yaml
```

Change the following settings:

```yaml
edgeStream:
  enable: true # change "false" to "true"
  ...
  server: xx.xxx.xxx.xxx:10004 # without port forwarding, use the tunnelNodePort 30004 instead of 10004
  ...
```

Restart edgecore:

```shell
systemctl restart edgecore.service
```
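You can script the edgeStream edit instead of making it by hand. The sketch below uses awk and touches only the lines inside the edgeStream: block. It sets enable to true and, if a server value is given, rewrites server. It assumes the block is indented with spaces, as in the default edgecore.yaml. enable_edge_stream is a hypothetical name. Restart edgecore afterwards as shown above.

```shell
# enable_edge_stream FILE [SERVER]  (hypothetical helper)
# Inside the "edgeStream:" block of an edgecore.yaml, sets "enable: true"
# and, if SERVER is given, replaces the "server:" value. The block ends at
# the next non-blank line indented no deeper than "edgeStream:" itself.
# The file is rewritten in place (contents replaced, inode kept).
enable_edge_stream() {
  local file="$1" server="${2:-}" tmp
  tmp="$(mktemp)" || return 1
  awk -v server="$server" '
    { line = $0; sub(/^ +/, "", line); indent = length($0) - length(line) }
    in_block && NF > 0 && indent <= block_indent { in_block = 0 }
    /^ *edgeStream:/ { in_block = 1; block_indent = indent; print; next }
    in_block && /^ *enable:/ { sub(/enable:.*/, "enable: true") }
    in_block && server != "" && /^ *server:/ { sub(/server:.*/, "server: " server) }
    { print }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, as root: enable_edge_stream /etc/kubeedge/config/edgecore.yaml 192.168.10.6:30004. Other modules' enable: lines, such as eventBus, are left untouched because they sit outside the edgeStream block.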

Configuring EdgeMesh

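Common commands on the edge node

```shell
# Check whether edgecore started successfully. An earlier problem caused by a bad Docker installation showed up in these logs
journalctl -u edgecore.service -b
# Restart the edgecore process on the edge node
systemctl restart edgecore
```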

Troubleshooting

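- A cgroup driver mismatch causes an error

While checking the logs with journalctl, the following error appeared:

```
init new edged error, misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"
```

Fix: change the configuration on the edge node so that edged's cgroupDriver in /etc/kubeedge/config/edgecore.yaml matches Docker's driver (systemd here), then restart edgecore.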

-

网络插件flannel或calico等Daemonset有强容忍度,会出现pending状态,使用Patch资源的方式来处理

#!/bin/bash

NodeSelectorPatchJson='{"spec":{"template":{"spec":{"nodeSelector":{"node-role.kubernetes.io/master": "","node-role.kubernetes.io/worker": ""}}}}}'

NoShedulePatchJson='{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}}}}'

edgenode="edgenode" # 只需要写一个边缘节点就行

if [ $1 ]; then

edgenode="$1"

fi

namespaces=($(kubectl get pods -A -o wide |egrep -i $edgenode | awk '{print $1}' ))

pods=($(kubectl get pods -A -o wide |egrep -i $edgenode | awk '{print $2}' ))

length=${#namespaces[@]}

for((i=0;i<$length;i++));

do

ns=${namespaces[$i]}

pod=${pods[$i]}

resources=$(kubectl -n $ns describe pod $pod | grep "Controlled By" |awk '{print $3}')

echo "Patching for ns:"${namespaces[$i]}",resources:"$resources

kubectl -n $ns patch $resources --type merge --patch "$NoShedulePatchJson"

sleep 1

done -

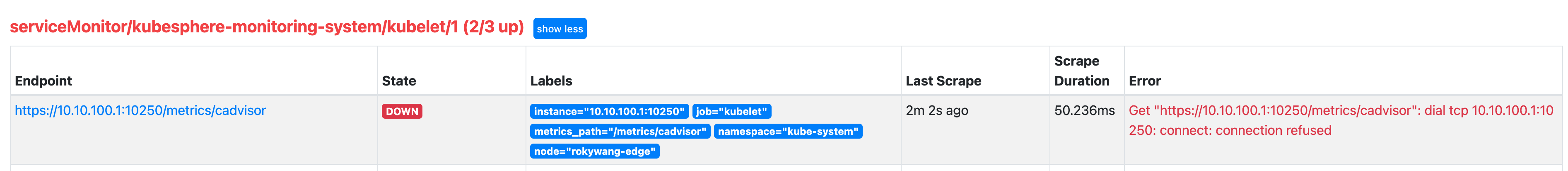

kubersphere后台无法显示边缘节点cpu和内存信息

在promethus自带后台里,查看targets

serviceMonitor/kubesphere-monitoring-system/kubelet/1 (2/3 up)

10250端口被10352替换,这个不清楚kubersphere有没有内部的处理

References

https://kubesphere.io/zh/docs/v3.3/installing-on-linux/cluster-operation/add-edge-nodes/

https://kubesphere.io/zh/blogs/kubesphere-kubeedge-edgemesh/